Singapore

Financial Literacy for Migrant Workers Through Conversational AI

- Status: Completed Research
- Research Year: 2024-25

Our project explores the potential of conversational AI to address the financial literacy needs of migrant workers. With large language models (LLMs) now widely used in social applications, we aim to investigate their viability for promoting financial literacy among this often underserved population.

Migrant workers face unique challenges in managing their finances, often due to language barriers, cultural differences, and limited access to financial education resources. By harnessing the power of conversational AI, we seek to develop a scalable and personalized solution that can adapt to the diverse needs of migrant workers.

Our research will focus on understanding the specific financial literacy gaps experienced by migrant workers, including vulnerability to scams, and identifying how conversational AI can effectively bridge those gaps. We will explore the potential of multilingual and multicultural AI systems that can accommodate different saving habits and provide personalized financial guidance.

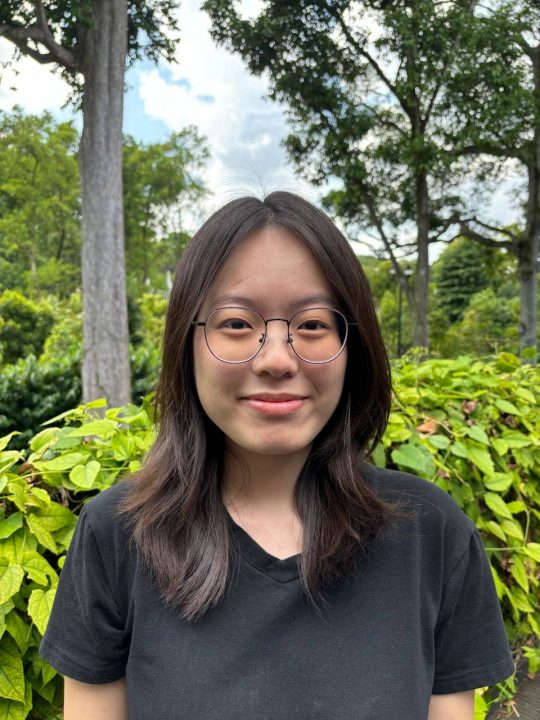

Researchers

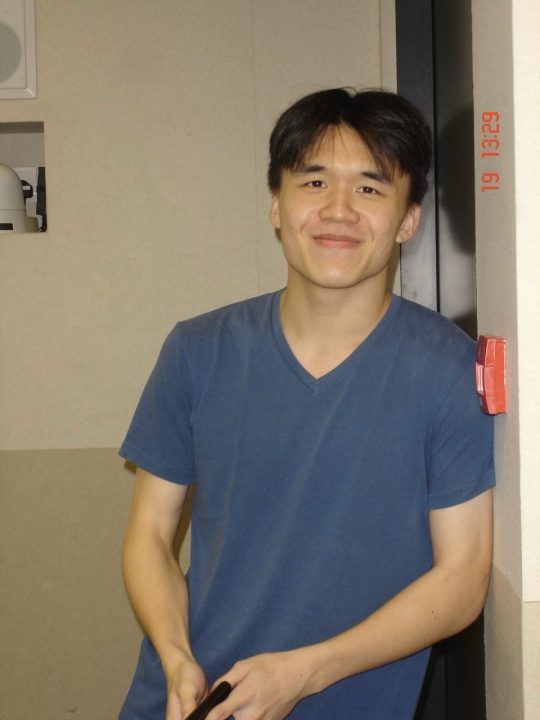

Mentors

Singapore Management University

Andrew Koh

Senior Lecturer of Computer Science, Singapore Management University